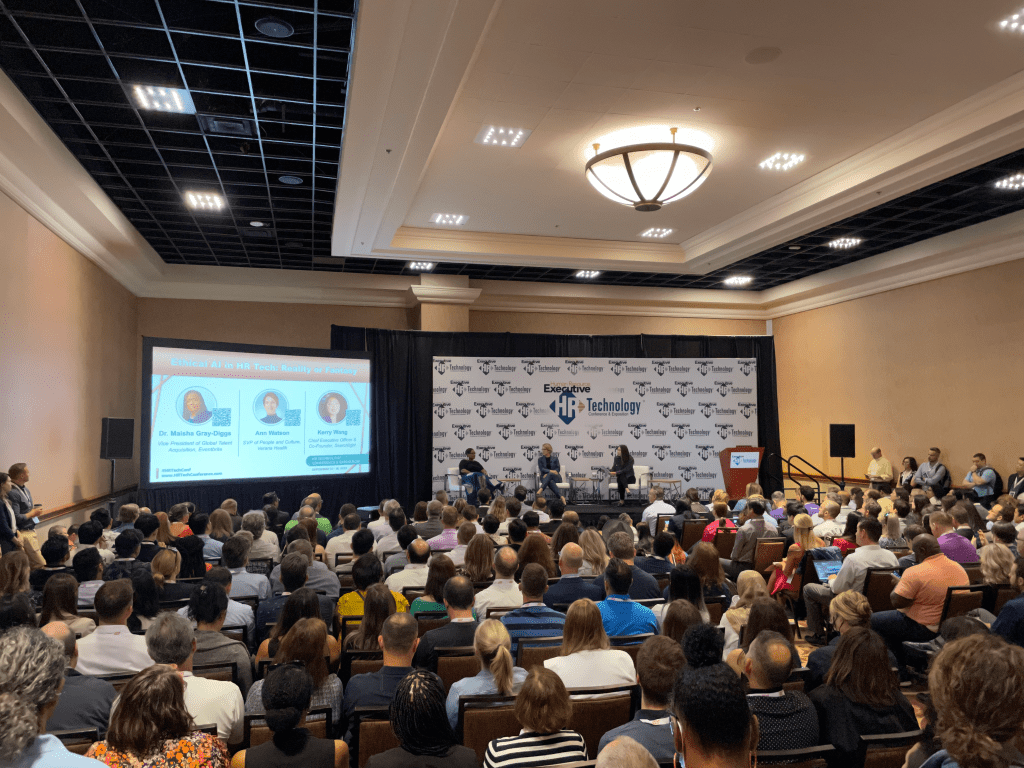

At the HR Tech Conference this past September, I had the privilege of being on a panel titled “Ethical AI in HR Tech: Reality or Fantasy,” along with Dr. Maisha Gray-Diggs, VP of Talent Acquisition at Eventbrite (previously at Twitter), and Ann Watson, SVP People and Culture at Verana Health. We talked about the benefits and use cases for AI in HR technology (short answer: a lot!), the privacy and bias issues that AI can cause, how vendors can create ethical AI models, and how customers can pick the good ones. Our space was packed to the point of standing room only - clearly this issue is top of mind for many HR leaders.

Here are four major takeaways from our conversation.

AI Is Popular - For Good Reason

AI seems to be everywhere and the shift towards it in HR tech feels inevitable. That means it’s the responsibility of People Leaders to navigate the ethical issues that come with that shift.

When asked about the use cases of AI in HR, Dr. Gray-Diggs said she sees it across the entire talent lifecycle. She believes it’s best used to improve speed while maintaining quality assurance. We humans usually compromise quality for speed, which risks mistakes. Imagine hiring in a rush, or while adjusting to remote work - there simply isn’t time to look at each candidate as closely as you otherwise would. Machines don’t have to make this same trade-off. AI can also provide useful data on recruiter capability and hiring quality that lets HR make more informed decisions.

Ann added that AI brings more: more candidates, different candidates, faster candidates, or faster hires. It can improve performance, increase retention, and open up capacity on the HR team for other projects.

AI Isn’t Going to Take Your Job

Another point that emerged from the conversation is that AI empowers human employees when used correctly - it doesn’t replace them. Joseph Fuller, a Harvard professor and co-lead of Managing the Future of Work, found in his research that when AI takes on important technical tasks, workers are freed to focus on other responsibilities, making them more productive and therefore more valuable to employers. He predicts that jobs that require human interaction, empathy, and applying judgment to what the machine is creating will grow in importance. In this way, AI could amplify the “human” in human resources.

There was consensus that human judgment combined with AI will always lead to better decisions than AI alone.

Ethical Issues in AI Are Real, but Can Be Controlled

The two major ethical issues in AI are privacy/surveillance, where AI is used to invade employees’ privacy, and bias/discrimination, where biased AI models adversely impact certain genders, ethnicities, or groups.

The first issue often comes down to a balance between respecting employees’ privacy and gathering data to inform judgements. Ann shared that, as a general rule, she tries to use the additional data from AI for abundance, rather than scarcity. She focuses on doing more, not catching people doing less. A way to address surveillance and privacy concerns is to transparently communicate how and why AI will be used to improve life for employees. In practice, Searchlight customers see 60-80% engagement rates (2-3X industry benchmarks!) when using our templated comms to introduce our technology and ask employees to opt in.

For the second issue, Dr. Gray-Diggs explained that having diverse subjects in AI research matters - a system is only as good as the data used to train it. In 2015, Amazon realized that an AI tool they were using to screen resumes was recommending men more often than women. This was because the AI was programmed to vet candidates by observing patterns in resumes submitted to the company over a 10-year period, and unsurprisingly, the majority of those candidates had been men. But if the AI had been programmed to observe patterns another way, such as with hiring outcomes and unbiased datasets on universal traits, it would not have reinforced biases. AI in itself is not biased.

How Do I Pick an Ethical AI Vendor?

Most of the panel’s Q&A was about how to tell if a vendor’s AI tool is ethical. Attendees told us that all AI vendors have very similar messaging, so they were struggling to tell which ones take ethics issues seriously (and which ones do not). There was a clear need for help making this important decision!

Ann and Dr. Gray-Diggs had several suggestions for assessing how seriously vendors are taking AI ethics. The first is to simply ask the question, “How do you know your AI is unbiased?” and see if the vendor has an answer. If they don’t, have they considered the issue? Are they forthcoming and excited, or nervous and eager to change the subject? Also, look at the team they’re bringing to the table - how diverse is it? All three of us agreed that starting off the conversation with cost or efficiency is another red flag - look for vendors that lead with empathy, kindness, and understanding of the human aspect of AI.

The good news is that vendors are starting to respond to this; bias audits are becoming more commonplace, and you can always ask vendors to share the data sources that their AI uses and check them yourself.
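If you want a feel for what a basic bias check can look like, here is a minimal sketch. It was not discussed on the panel and is not any vendor's method. It compares selection rates across groups and flags any group whose rate falls below 80% of the highest group's rate, a rule of thumb often called the "four-fifths rule." The function names, the sample data, and the 0.8 threshold are all assumptions for illustration; a real audit would involve far more than this.

```python
from collections import Counter


def impact_ratios(records):
    """Return each group's selection rate divided by the highest group's rate.

    `records` is an iterable of (group, was_selected) pairs, where
    `was_selected` is True if the tool recommended the candidate.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    # Selection rate = candidates recommended / candidates screened, per group.
    rates = {group: selected[group] / totals[group] for group in totals}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No candidates were selected, so ratios are undefined.")
    return {group: rate / highest for group, rate in rates.items()}


def flagged_groups(records, threshold=0.8):
    """List groups whose impact ratio is below the (assumed) threshold."""
    ratios = impact_ratios(records)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up screening results: 10 candidates per group.
sample = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

if __name__ == "__main__":
    print(impact_ratios(sample))
    print(flagged_groups(sample))
```

In this made-up sample, group_b is recommended at 30% versus 50% for group_a, so its ratio falls below the threshold and it gets flagged. A flag like this is a prompt to ask the vendor harder questions, not proof of bias on its own.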